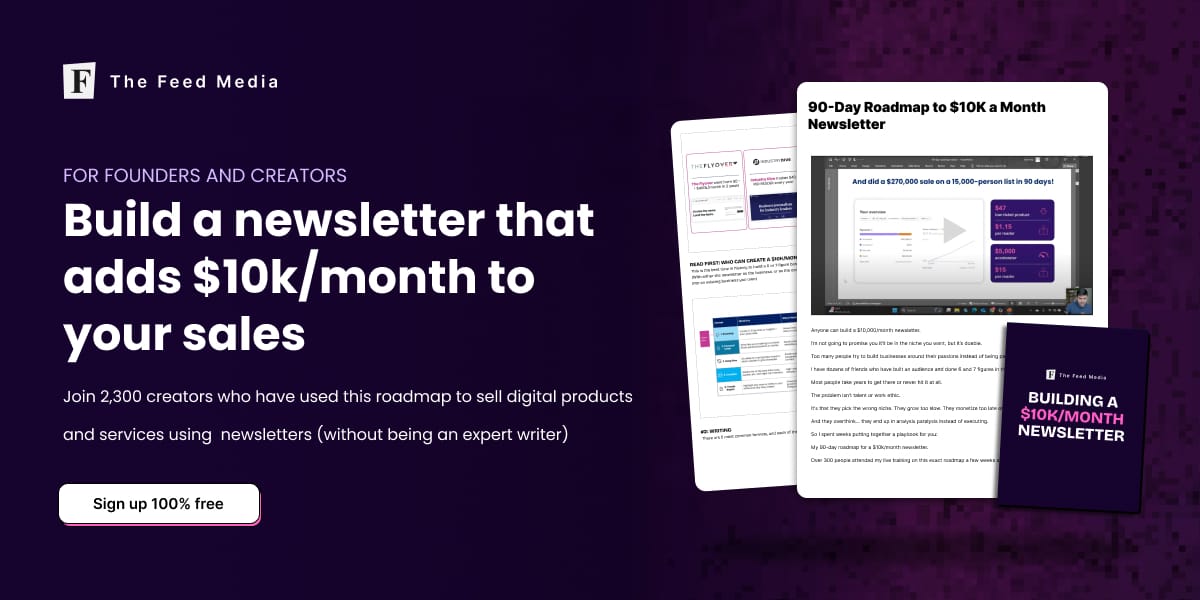

Building and deploying machine learning models no longer has to mean managing infrastructure, provisioning instances, or worrying about idle capacity. With its new serverless customization features, Amazon SageMaker lets you tailor AI workflows without taking on that operational overhead.

Here’s what this update means and why it matters.

What’s new in SageMaker serverless customization

Amazon has expanded SageMaker’s serverless capabilities to give teams more control while keeping the simplicity of a fully managed experience.

With the latest updates, you can now:

Customize serverless inference configurations

Fine-tune resource behavior without managing servers

Optimize performance for specific workloads

Pay only for actual usage, not idle capacity

This bridges the gap between “fully managed” and “fully flexible.”
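The article does not name the specific settings involved, so the following is only a minimal sketch. It assumes the customization is expressed through the `ServerlessConfig` block that SageMaker Serverless Inference accepts in an endpoint configuration. The helper name `build_serverless_endpoint_config`, the variant name, and the allowed-value constants are illustrative assumptions; check the current AWS limits before relying on them.

```python
# Assumed limits: these mirror the Serverless Inference values documented
# at the time of writing. Verify against the current AWS documentation.
ALLOWED_MEMORY_MB = (1024, 2048, 3072, 4096, 5120, 6144)
MAX_CONCURRENCY_LIMIT = 200


def build_serverless_endpoint_config(config_name, model_name,
                                     memory_mb=2048, max_concurrency=5):
    """Build keyword arguments for SageMaker's create_endpoint_config call.

    Hypothetical helper: it only assembles and validates the request
    dictionary and does not contact AWS.
    """
    if memory_mb not in ALLOWED_MEMORY_MB:
        raise ValueError(f"memory_mb must be one of {ALLOWED_MEMORY_MB}")
    if not 1 <= max_concurrency <= MAX_CONCURRENCY_LIMIT:
        raise ValueError(
            f"max_concurrency must be between 1 and {MAX_CONCURRENCY_LIMIT}"
        )
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",  # illustrative variant name
                "ModelName": model_name,
                # No instance type or count: capacity is managed by the service.
                "ServerlessConfig": {
                    "MemorySizeInMB": memory_mb,
                    "MaxConcurrency": max_concurrency,
                },
            }
        ],
    }


if __name__ == "__main__":
    kwargs = build_serverless_endpoint_config(
        "demo-serverless-config", "demo-model",
        memory_mb=4096, max_concurrency=10,
    )
    print(kwargs)
```

With AWS credentials configured, the resulting dictionary could be passed as `boto3.client("sagemaker").create_endpoint_config(**kwargs)`. Keeping the validation separate from the API call makes it possible to review a configuration before anything is created.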

Why serverless customization matters

Traditional ML deployments often force a trade-off:

Control vs. simplicity

Performance vs. cost efficiency

Serverless customization reduces that trade-off by letting you adapt inference behavior to your model’s needs—without standing up or scaling infrastructure yourself.

This is especially valuable for:

Spiky or unpredictable workloads

Event-driven AI applications

Rapid experimentation and iteration

Teams without dedicated ML ops resources

Faster experimentation, lower operational burden

With serverless customization, data scientists and ML engineers can:

Deploy models faster

Experiment with different configurations easily

Reduce DevOps involvement

Focus more on model quality and business outcomes

The result is shorter development cycles and quicker time to value.
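Experimenting with different configurations can be as simple as enumerating candidate settings and deploying each one in turn. The sketch below only generates the candidates. The function name `candidate_configs` and the chosen values are illustrative assumptions, and the key names assume the `ServerlessConfig` fields used by SageMaker Serverless Inference.

```python
from itertools import product


def candidate_configs(memory_sizes_mb, concurrency_levels):
    """Return every combination of memory size and max concurrency.

    Hypothetical helper for planning an experiment; it does not deploy
    anything or call AWS.
    """
    return [
        {"MemorySizeInMB": memory, "MaxConcurrency": concurrency}
        for memory, concurrency in product(memory_sizes_mb, concurrency_levels)
    ]


if __name__ == "__main__":
    # Illustrative values only; pick sizes that fit your model.
    for config in candidate_configs([2048, 4096], [5, 20]):
        print(config)
```

Each candidate could then be deployed and measured for latency and cost, which keeps the comparison systematic instead of ad hoc.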

Cost optimization built in

One of the biggest advantages of this update is cost control.

Because the service is serverless:

You’re billed only when inference runs

There’s no cost for idle instances

Scaling happens automatically

For organizations running AI at scale, or just getting started, this can reduce cloud spend, most noticeably when traffic is intermittent and dedicated instances would otherwise sit idle.
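To see when usage-based billing actually wins, it helps to compare it with an always-on instance. The following is a rough sketch under stated assumptions: all prices are made-up placeholders, not AWS rates, the function names are hypothetical, and the model ignores data-processing charges and any provisioned capacity.

```python
def serverless_monthly_cost(requests_per_month, avg_duration_s, price_per_second):
    """Cost when billed only for the seconds spent serving requests."""
    return requests_per_month * avg_duration_s * price_per_second


def always_on_monthly_cost(price_per_hour, hours_per_month=730):
    """Cost of one instance running all month, whether busy or idle."""
    return price_per_hour * hours_per_month


def break_even_requests(avg_duration_s, price_per_second,
                        price_per_hour, hours_per_month=730):
    """Monthly request volume at which both options cost the same."""
    fixed_cost = always_on_monthly_cost(price_per_hour, hours_per_month)
    return fixed_cost / (avg_duration_s * price_per_second)


if __name__ == "__main__":
    # Placeholder prices for illustration only; look up real rates.
    PRICE_PER_SECOND = 0.0001
    PRICE_PER_HOUR = 0.20

    usage = serverless_monthly_cost(100_000, 0.5, PRICE_PER_SECOND)
    fixed = always_on_monthly_cost(PRICE_PER_HOUR)
    threshold = break_even_requests(0.5, PRICE_PER_SECOND, PRICE_PER_HOUR)
    print(f"serverless: ${usage:.2f}, always-on: ${fixed:.2f}")
    print(f"break-even at about {threshold:,.0f} requests per month")
```

Below the break-even volume, paying per request is cheaper in this simplified model. Above it, a dedicated instance may cost less, so the comparison is worth rerunning with real prices and traffic figures.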

Final thoughts

Amazon SageMaker’s new serverless customization features reflect a clear trend in AI platforms: more power with less complexity. By combining flexibility, automation, and usage-based pricing, SageMaker continues to make production-grade AI accessible to more teams.

If you’re building, deploying, or scaling machine learning models on AWS, this update is worth paying attention to.